DiaCorpus

A collaborative project with the Israel Innovation Authority

The goal of this project is to develop large, comprehensive, annotated corpora of the Israeli/Palestinian Arabic dialect. The project is part of the National NLP Plan of Israel. I am the lead data scientist on this project, which includes 12 additional researchers and two teams of 6-10 annotators each.

The endeavor encompasses two distinct undertakings:

a. Spoken Arabic Corpora Creation

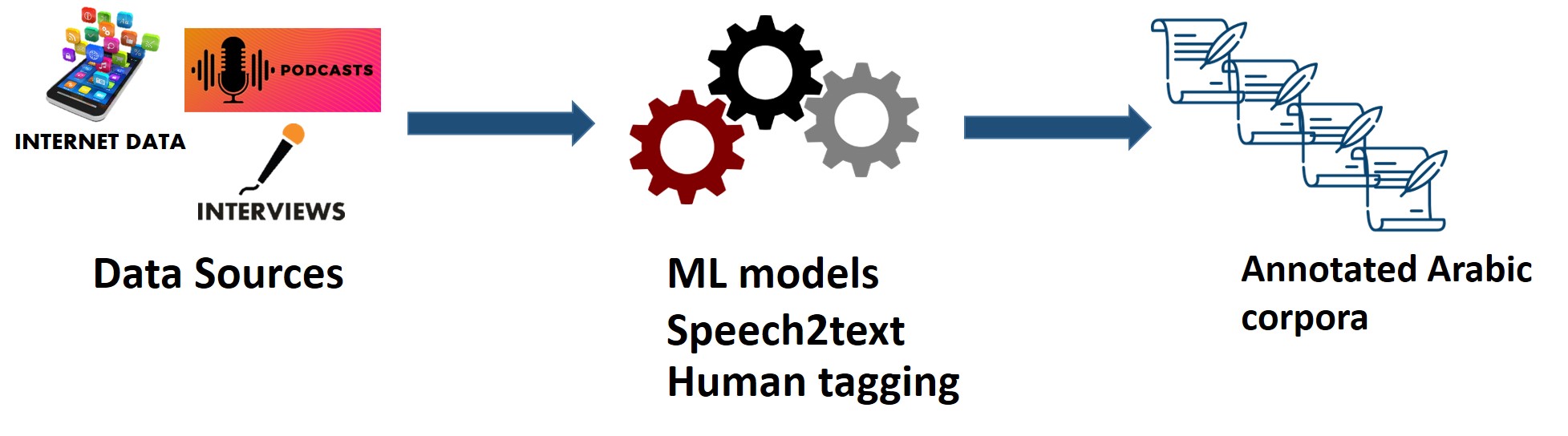

Creating dialectal Arabic corpora from recorded speech: podcasts and interviews.

- Data Sources: publicly available podcasts and personal interviews.

- Data Transcription: automatic transcription with Azure speech-to-text, followed by manual spelling correction.

- Data Annotation: each text chunk is manually annotated for three NLP tasks: sentiment, emotion, and named entity recognition (NER).

- Modeling: for each task, we build and publish a predictive NLP model.
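To make the annotation step above more concrete, here is a minimal Python sketch of what a single annotated chunk might look like, assuming NER entities are stored as character-offset spans and each task uses a small fixed label set. The class names (`AnnotatedChunk`, `EntitySpan`) and the label inventories are hypothetical illustrations, not the project's actual schema or tag sets.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical label inventories; the project's actual tag sets may differ.
SENTIMENT_LABELS = {"positive", "negative", "neutral"}
EMOTION_LABELS = {"joy", "sadness", "anger", "fear", "surprise", "disgust", "none"}
NER_LABELS = {"PER", "LOC", "ORG"}


@dataclass
class EntitySpan:
    start: int  # character offset into the chunk text, inclusive
    end: int    # character offset into the chunk text, exclusive
    label: str


@dataclass
class AnnotatedChunk:
    chunk_id: str
    source: str  # e.g. "podcast" or "interview"
    text: str    # transcript after manual spelling correction
    sentiment: str
    emotion: str
    entities: List[EntitySpan] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the chunk is consistent."""
        problems = []
        if self.sentiment not in SENTIMENT_LABELS:
            problems.append(f"unknown sentiment label: {self.sentiment}")
        if self.emotion not in EMOTION_LABELS:
            problems.append(f"unknown emotion label: {self.emotion}")
        for ent in self.entities:
            if ent.label not in NER_LABELS:
                problems.append(f"unknown NER label: {ent.label}")
            # A span must be non-empty and lie entirely inside the text.
            if not (0 <= ent.start < ent.end <= len(self.text)):
                problems.append(f"span out of range: ({ent.start}, {ent.end})")
        return problems

    def entity_texts(self) -> List[Tuple[str, str]]:
        """Return (surface string, label) for each entity span."""
        return [(self.text[e.start:e.end], e.label) for e in self.entities]
```

Storing entities as character offsets rather than token indices keeps the NER annotation valid even if the tokenizer used for modeling changes later; a `validate()` pass of this kind could be run on each annotator's output before the chunk enters the corpus.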

Corpus creation flow: spoken Arabic.

Personal interviews recorded as part of the project. The photos were taken by Julian Jubran, who interviewed all participants in the project.

b. Textual (Dialectal) Arabic Corpora Creation

Creating dialectal Arabic corpora from textual sources for three distinct NLP tasks: sentiment analysis, coreference resolution, and summarization.

- Data Sources: dialectal Arabic texts (e.g., Twitter data, transcribed podcasts).

- Data Preprocessing: data filtering and NLP preprocessing steps (e.g., tokenization).

- Data Annotation: human annotation of the texts for each task (sentiment, coreference resolution, and summarization).

- Modeling: for each task, we build and publish a predictive NLP model.
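The filtering and tokenization steps above might look roughly like the following Python sketch for tweet-like text. The specific rules (dropping URLs and @mentions, stripping diacritics and tatweel, removing exact duplicates, requiring a minimum token count and a minimum share of Arabic letters) and their thresholds are assumptions for illustration. The regex tokenizer is a simple stand-in, not the tokenizer used in the project.

```python
import re
from typing import Iterable, List

# Assumed cleanup rules; the project's actual filters are not specified.
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
# Tanwin, short-vowel marks, shadda, sukun (U+064B-U+0652) and tatweel (U+0640).
DIACRITICS_RE = re.compile(r"[\u064B-\u0652\u0640]")
ARABIC_LETTER_RE = re.compile(r"[\u0621-\u064A\u0671-\u06D3]")
# Runs of word characters, or single non-space symbols (punctuation, emoji).
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def clean(text: str) -> str:
    """Remove URLs, @mentions, diacritics and tatweel; collapse whitespace."""
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    text = DIACRITICS_RE.sub("", text)
    return " ".join(text.split())


def tokenize(text: str) -> List[str]:
    """Split cleaned text into word tokens and single-symbol tokens."""
    return TOKEN_RE.findall(text)


def arabic_ratio(text: str) -> float:
    """Fraction of alphabetic characters that are Arabic letters."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    return sum(1 for ch in letters if ARABIC_LETTER_RE.match(ch)) / len(letters)


def preprocess(
    texts: Iterable[str], min_tokens: int = 3, min_arabic_ratio: float = 0.7
) -> List[List[str]]:
    """Clean, deduplicate and filter raw texts; return the token lists kept."""
    seen = set()
    kept = []
    for raw in texts:
        text = clean(raw)
        if text in seen:  # exact duplicate after cleaning
            continue
        tokens = tokenize(text)
        if len(tokens) < min_tokens or arabic_ratio(text) < min_arabic_ratio:
            continue
        seen.add(text)
        kept.append(tokens)
    return kept
```

Because diacritics are stripped before the duplicate check, two tweets that differ only in optional vowel marks are treated as duplicates, which is usually what one wants for dialectal text, where vowelization is inconsistent.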